One good sign of a successful business is its need to extract information from various sources.

This implies a diversity of data types that need to come together to provide a complete picture of an enterprise’s moving parts. It also means the business needs one or more data mapping tools to extract information from multiple inputs and piece it together into accurate, real-time information.

Here is our list of the best data mapping software:

- Ketch EDITOR’S CHOICE This cloud platform automates GDPR compliance by discovering, classifying, and tracking personal data across systems. It provides real-time visibility and AI-driven risk insights that simplify audits, ROPAs, and regulatory responses. Access a free trial.

- Fivetran HVR This data integration tool automates the extraction and loading of data from multiple sources into data warehouses, simplifying ETL processes and ensuring data synchronization. It can be used to move and transform data. Available as a SaaS package or for self-hosting on Windows or Linux.

- HVR A data mapping tool for larger organizations that have to deal with bulkier volumes of data; it is highly flexible and fits into any architecture, regardless of its diversity or complexity.

- Boomi AtomSphere An on-demand multi-tenant cloud integration platform for syncing and replicating data; it is easy to learn and comes with templates that help novices master the pipelining process.

- CloverDX A “universal” data mapping tool that connects data and destination regardless of the platforms, network architecture, and applications involved; it can be used to design, create, and deploy data flows for a wide array of scenarios.

- IBM InfoSphere DataStage A data mapping tool from one of the leaders in the data storage industry that integrates a wide range of data; it also helps that the tool works with IBM’s vast array of data management tools.

- Oracle Data Integrator Another product from one of the biggest data storage technology companies; this tool is ideal for larger businesses looking for multi-dimensional data organization, mapping, and storage.

- Pimcore Product Information Management (PIM) This mapping tool binds all data found on a network and brings it together as real-time information; it works seamlessly with a wide array of enterprise systems and technologies.

- Talend Data Integration This mapping tool works with enterprise data located in any type of architecture; it unifies, integrates, transforms, and delivers that data wherever it is required after ensuring it is clean.

What is data mapping?

Data mapping is the term used to describe the plotting of a flowchart that shows the process involved in taking data from various sources, processing or parsing it into required formats, and sending it on to be stored in one or more target databases.

It also involves matching data fields or elements from one or more source databases to their corresponding or intended data fields in other target databases.
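To make the field-matching idea concrete, here is a minimal Python sketch. The source and target field names (`cust_id`, `customer_id`, and so on) and the `map_record` helper are hypothetical; real tools store such mappings in their own project formats rather than as a Python dictionary.

```python
from typing import Any

# Hypothetical mapping from source field names to target field names.
FIELD_MAP: dict[str, str] = {
    "cust_id": "customer_id",
    "fname": "first_name",
    "lname": "last_name",
    "email_addr": "email",
}


def map_record(source: dict[str, Any], field_map: dict[str, str]) -> dict[str, Any]:
    """Rename the fields of one source record according to field_map.

    Source fields with no entry in the map are dropped, mirroring a target
    schema that only accepts the mapped columns.
    """
    return {target: source[src] for src, target in field_map.items() if src in source}


row = {"cust_id": 42, "fname": "Ada", "lname": "Lovelace",
       "email_addr": "ada@example.com", "legacy_flag": "Y"}
print(map_record(row, FIELD_MAP))
# {'customer_id': 42, 'first_name': 'Ada', 'last_name': 'Lovelace', 'email': 'ada@example.com'}
```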

Data mapping is a critical part of data management and is used in processes like data integration, data transformation, data migration, and data warehousing.

Data mapping also underpins the extract, transform, and load (ETL) processes that combine data in a destination system. As a result, businesses can use the mapped data to gain deeper, all-inclusive insight into how current and efficient their processes are. It allows them to organize, distill, analyze, and understand vast amounts of data held in numerous locations and extract comprehensive information from it.
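The three ETL stages can be illustrated with a small, self-contained Python sketch. The CSV contents, the `orders` table, and the transformation rules are hypothetical; an in-memory SQLite database stands in for the destination system.

```python
import csv
import io
import sqlite3

# Hypothetical CSV export standing in for a source system.
SOURCE_CSV = """order_no,amount,country
1001,19.99,us
1002,5.00,de
"""


def extract(text: str) -> list[dict[str, str]]:
    """Extract: read rows from a CSV source."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict[str, str]]) -> list[tuple[int, int, str]]:
    """Transform: cast order numbers, convert amounts to integer cents, upper-case country codes."""
    return [
        (int(r["order_no"]), round(float(r["amount"]) * 100), r["country"].upper())
        for r in rows
    ]


def load(rows: list[tuple[int, int, str]], conn: sqlite3.Connection) -> None:
    """Load: insert the transformed rows into the target table, whose column names differ from the source."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, amount_cents INTEGER, country_code TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(SOURCE_CSV)), conn)
print(conn.execute("SELECT * FROM orders").fetchall())
# [(1001, 1999, 'US'), (1002, 500, 'DE')]
```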

What is a data mapping tool?

Data mapping software and tools are used to plan and design the flow of data through ETL: taking the correct data sets from the correct sources, transforming them into the expected formats, and loading them into their corresponding target databases.

Once they have been configured, these tools continue to automatically perform the data mapping based on schedules or triggering conditions.
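The following sketch shows, in simplified form, the decision a configured tool makes on each scheduler tick: run the mapping job when its interval has elapsed or when a trigger condition (such as a new file arriving) has fired. The `should_run` function and its parameters are hypothetical; commercial tools expose this through their own schedulers.

```python
from datetime import datetime, timedelta
from typing import Optional


def should_run(
    last_run: Optional[datetime],
    now: datetime,
    interval: timedelta,
    trigger_fired: bool = False,
) -> bool:
    """Decide whether a configured mapping job should run now.

    The job runs if a trigger condition has fired, if it has never run,
    or if at least `interval` has elapsed since the last run.
    """
    if trigger_fired or last_run is None:
        return True
    return now - last_run >= interval


start = datetime(2024, 1, 1, 9, 0)
hourly = timedelta(hours=1)
print(should_run(None, start, hourly))                                 # True: first run
print(should_run(start, start + timedelta(minutes=30), hourly))        # False: too soon
print(should_run(start, start + timedelta(minutes=30), hourly, True))  # True: trigger fired
print(should_run(start, start + timedelta(hours=1), hourly))           # True: interval elapsed
```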

Why do we need data mapping?

There are several reasons to use data mapping tools and software:

- Administrators might need to automate their data mapping process to cater to multiple data migration schedules instead of writing scripts or manually importing data each time they need to extract data.

- Using these tools ensures data that needs parsing is converted correctly and the correct data goes to the right destination – every single time.

- They make it easier to integrate, transform, and move mission-critical data and create data warehouses.

- The connection between source and target databases may need to be permanent; these tools can help maintain that live connection.

- They keep data clean – they can be configured to trigger alerts when any inconsistencies are spotted.

- Most of them come with insightful and interactive reports that can be shared with all stakeholders.
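One simple way a tool can spot inconsistencies is to reconcile the source and target after a load. The Python sketch below compares rows by a key column and returns alert messages; the `reconcile` function and the sample rows are hypothetical, and a real deployment would route alerts to email or a chat channel rather than printing them.

```python
from typing import Any

Row = dict[str, Any]


def reconcile(source: list[Row], target: list[Row], key: str) -> list[str]:
    """Compare source and target rows by `key` and return human-readable alerts."""
    src = {r[key]: r for r in source}
    tgt = {r[key]: r for r in target}
    alerts: list[str] = []
    missing = sorted(src.keys() - tgt.keys())       # loaded nowhere
    extra = sorted(tgt.keys() - src.keys())         # present only in the target
    changed = sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k])
    if missing:
        alerts.append(f"missing in target: {missing}")
    if extra:
        alerts.append(f"unexpected in target: {extra}")
    if changed:
        alerts.append(f"values differ for keys: {changed}")
    return alerts


source = [{"id": 1, "email": "a@x.com"}, {"id": 2, "email": "b@x.com"}, {"id": 3, "email": "c@x.com"}]
target = [{"id": 1, "email": "a@x.com"}, {"id": 2, "email": "B@x.com"}, {"id": 4, "email": "d@x.com"}]
for alert in reconcile(source, target, "id"):
    print("ALERT:", alert)
```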

The bottom line is that these tools reduce human error and help deliver all-inclusive information in real-time.

What are the features that make for a good data mapping tool?

Let’s have a look at some features to look for in professional data mapping tools and software:

- Ease of use – features like graphical user interfaces, drag-and-drop operation, and code-free configuration make for great tools.

- Automation – they should also make it easy to create workflows, schedules, and triggers.

- Instant preview – when the tools allow users to view data in both raw and processed formats, it is easier to prevent mapping errors during design time.

- High compatibility – in today’s world of complex data types, diversity of software, and hybrid configuration of networks, it makes sense for mapping tools to handle complex design requirements and work with a wide array of technologies.

- Price – it is always important that any software solution, not just a data mapping tool, is worth the investment, i.e., delivers a positive ROI.

The tools on our list have been rated on these and other criteria.

Our methodology for selecting the best Data Mapping Software:

We’ve broken down our analysis for you based on these key criteria:

- Ease of Use and Intuitiveness: Prioritizes software that features a user-friendly interface, making it accessible to users of all technical skill levels.

- Integration Capabilities: Highlights tools that offer extensive integration options with a variety of data sources and platforms, ensuring flexibility in data handling.

- Advanced Mapping Features: Focuses on software that provides comprehensive mapping functionalities, including transformation rules, conditional logic, and automation capabilities.

- Scalability: Considers whether the software can efficiently handle growing amounts of data and complexity as business needs expand.

- Support and Documentation: Values the availability of robust support options and thorough documentation to assist users in maximizing the tool’s capabilities.

The best Data Mapping software

Let’s go ahead and have a look at the best data mapping tools and software:

1. Ketch (FREE TRIAL)

Ketch is a consent management platform that is mainly designed for use by websites. The system’s data mapping feature helps businesses understand and manage their data footprint across various platforms, providing a comprehensive view of data risks. This tool allows organizations to map the flow of data from collection through processing to ensure compliance with privacy regulations like GDPR and CCPA.

Key Features:

- Automated Data Discovery: AI-powered tools automatically discover and map data across complex enterprise systems.

- Centralized Data Classification: Machine-driven, inference-based data classification with central reference storage.

- Continuous Monitoring: Always-on discovery and monitoring, providing granular insights from the system level down to individual cells.

- Integration with Privacy Software: Assists with risk assessments, records of processing activities (ROPAs), and privacy impact assessments (PIAs).

- Customizable Privacy Notices: Allows businesses to customize privacy notices and consent banners.

Why do we recommend it?

We recommend Ketch for its comprehensive, user-friendly data mapping tool that simplifies privacy compliance. It provides clear visibility into data flows, helping businesses manage risks, comply with regulations like GDPR and CCPA, and automate data assessments, ensuring ongoing privacy compliance with minimal effort and complexity.

With Ketch, users can quickly identify data risks and optimize data usage, reducing reliance on manual processes like spreadsheets. The platform simplifies data assessment, helping businesses stay ahead of regulatory requirements. It ensures that data is handled responsibly by offering detailed mapping and automated updates as privacy laws evolve.

Ketch’s data mapping is highly integrated with its other privacy management tools, providing a unified approach to data governance. The tool streamlines the assessment of data access, retention, and transfer, making it easier to document privacy practices and comply with the increasing complexity of data protection laws.

This feature is especially valuable for enterprises looking to maintain privacy compliance across various departments. It supports transparency by ensuring that businesses have a clear overview of data practices, empowering them to address potential risks proactively and avoid costly fines related to privacy violations.

Who is it recommended for?

Ketch is recommended for businesses of all sizes that need to manage data privacy and compliance effectively. It’s particularly valuable for organizations handling large amounts of personal data, requiring detailed data mapping, and aiming to stay compliant with privacy regulations like GDPR and CCPA across multiple platforms.

Pros:

- Automation and Efficiency: Automated data mapping significantly reduces manual workload, saving time and resources.

- Consistency and Accuracy: Provides precise and up-to-date data classification and mapping, enhancing data integrity and compliance.

- Scalability: Capable of handling large volumes of data across multiple platforms, making it suitable for businesses of all sizes.

- User-Friendly Interface: Designed to enable users to navigate and utilize the platform effectively without extensive technical knowledge.

- Strong Customer Support: Ketch provides extensive self-help guides, usage tutorials, and a live support team who are happy to help with onboarding and fine-tuning.

Cons:

- Learning Curve: Depending on your chosen plan, setting up the system could take quite an effort.

Ketch makes its services accessible to all sizes of businesses, though it should be noted that the platform is particularly aimed at the data control needs of websites. There are four plan levels and the first of these is Free. You can get a free trial of the Starter edition.

EDITOR'S CHOICE

Ketch is our top pick for a data mapping software package because it offers a powerful solution for online businesses to manage their data privacy and compliance needs. The platform simplifies the often complex process of mapping data flows across various systems and departments, ensuring that websites can efficiently track and document how personal data is collected, processed, and stored. With growing privacy regulations like GDPR and CCPA, Ketch’s data mapping feature is essential for businesses looking to stay compliant and mitigate risks. Ketch’s automation capabilities reduce the need for manual data mapping and ensure that businesses are always up to date with evolving privacy laws. The platform automatically detects changes in data flows and updates data maps in real time, making it easier for organizations to stay ahead of regulatory requirements. This reduces administrative workload and enhances accuracy. Ketch offers a unified approach by integrating its data mapping tool with other privacy and compliance features, such as consent management and reporting. This provides businesses with a comprehensive view of their data practices, allowing them to proactively address privacy risks.

Download: Access the FREE Trial

Official Site: https://app.ketch.com/sign-up

OS: Cloud-based

2. Fivetran HVR

Fivetran HVR is a high-performance data replication and integration solution designed for enterprises needing real-time data delivery and analytics. Now part of Fivetran’s suite of offerings, HVR is known for its ability to handle complex data architectures, making it a go-to choice for organizations with demanding data pipelines.

Key Features:

- High Performance for Real-Time Replication: Change Data Capture (CDC) for near real-time data replication.

- Remaps and Reformats Data: Enables data from different sources in different formats to be collected into one group.

- Broad Platform Support: Can extract data from or insert into Oracle, SQL Server, SAP HANA, Snowflake, and more.

- Hybrid Environments: Can move data between platforms, including cloud systems and on-premises servers with different operating systems.

- Data Centralization: The software offers a centralized console for managing replication tasks, which simplifies setup and monitoring.
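Change Data Capture is the technique behind HVR's near real-time replication. A real CDC system reads committed changes from the source database's transaction log; the minimal Python sketch below shows only the other half, applying a stream of captured change events to a target replica in commit order. The `ChangeEvent` layout and the `apply_changes` function are hypothetical and do not reflect HVR's internal format.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class ChangeEvent:
    """One captured change (hypothetical layout)."""
    op: str                               # "insert", "update" or "delete"
    key: int                              # primary key of the affected row
    row: Optional[dict[str, Any]] = None  # new row image; None for deletes


def apply_changes(replica: dict[int, dict[str, Any]], events: list[ChangeEvent]) -> None:
    """Apply captured changes, in commit order, to a replica keyed by primary key."""
    for ev in events:
        if ev.op in ("insert", "update"):
            replica[ev.key] = dict(ev.row or {})   # upsert the new row image
        elif ev.op == "delete":
            replica.pop(ev.key, None)              # tolerate already-deleted rows
        else:
            raise ValueError(f"unknown operation: {ev.op}")


replica = {1: {"name": "Ada"}}
events = [
    ChangeEvent("update", 1, {"name": "Ada L."}),
    ChangeEvent("insert", 2, {"name": "Grace"}),
    ChangeEvent("delete", 1),
]
apply_changes(replica, events)
print(replica)  # {2: {'name': 'Grace'}}
```

Treating inserts and updates alike as upserts, and ignoring deletes of rows that are already gone, means replaying the same events twice leaves the replica in the same state, which is useful when a replication job restarts after an interruption.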

Why do we recommend it?

Fivetran HVR is a data movement facility that can extract records from databases, transform them, and then insert them into another system. This can be used to create data lakes for analysis or to prepare data for use in an ERP system. Tasks can add context to data, verify it, or deduplicate it, as well as replicate it.

One of the core use cases for the Fivetran package is feeding enterprise data warehousing applications. This is where it functions as a data mapping system, which is what qualifies it for this list. This function is becoming increasingly important as AI systems advance, because those applications rely heavily on large amounts of data that need to be categorized in many different ways.

HVR is well-suited for replicating data from on-premises systems to cloud data warehouses like Snowflake or BigQuery. It isn’t limited to creating data warehouses: it can also replicate data for distributed databases or duplicate data for failover and backup systems.

Ironically, HVR is also very useful for deduplication, identifying where the same data is held in different places. Such scenarios can be dangerous because local stores can be changed, leading to different versions of the data giving different information to users who think that they are operating on a common pool.
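The conflict scenario described above can be detected by comparing records that share a key across stores. The Python sketch below only illustrates that comparison and is not a description of how HVR performs it; the `find_conflicts` function, the store names, and the fields are hypothetical.

```python
from collections import defaultdict
from typing import Any

Record = dict[str, Any]


def find_conflicts(stores: dict[str, list[Record]], key: str) -> dict[Any, dict[str, Record]]:
    """Return keys held in more than one store whose copies disagree.

    The result maps each conflicting key to {store_name: record}.
    Keys whose copies are identical are redundant rather than conflicting,
    so they are not reported here.
    """
    seen: dict[Any, dict[str, Record]] = defaultdict(dict)
    for store_name, records in stores.items():
        for rec in records:
            seen[rec[key]][store_name] = rec
    conflicts: dict[Any, dict[str, Record]] = {}
    for k, copies in seen.items():
        versions = list(copies.values())
        if len(versions) > 1 and any(v != versions[0] for v in versions[1:]):
            conflicts[k] = copies
    return conflicts


stores = {
    "crm": [{"id": 7, "phone": "555-0100"}, {"id": 8, "phone": "555-0101"}],
    "billing": [{"id": 7, "phone": "555-0199"}, {"id": 8, "phone": "555-0101"}],
}
conflicts = find_conflicts(stores, "id")
print(sorted(conflicts))  # [7]
```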

Who is it recommended for?

This package is useful for any business that needs to consolidate data stores or move data from one application to another. Any application other than straightforward replication requires some degree of transformation, which involves identifying the meanings of data fields so that equivalent fields can be matched up. Small businesses should consider the Free plan.

Pros:

- Data Security: Uses compression and encryption during data movements – the security of data at rest depends on the applications in which you hold data.

- Resilience and Continuity: Can be used to back up data and includes automatic recovery from interruptions, ensuring data integrity.

- Real-Time Analytics Enablement: By enabling real-time data flows, HVR supports operational dashboards and streaming analytics.

- Scalability: HVR is built to handle large-scale enterprise workloads.

- Data Governance: Enables control over data access and the preservation of original information to protect data integrity.

Cons:

- Complex Pricing Model: HVR’s pricing can be a deterrent, particularly for smaller organizations or those seeking straightforward cost predictability.

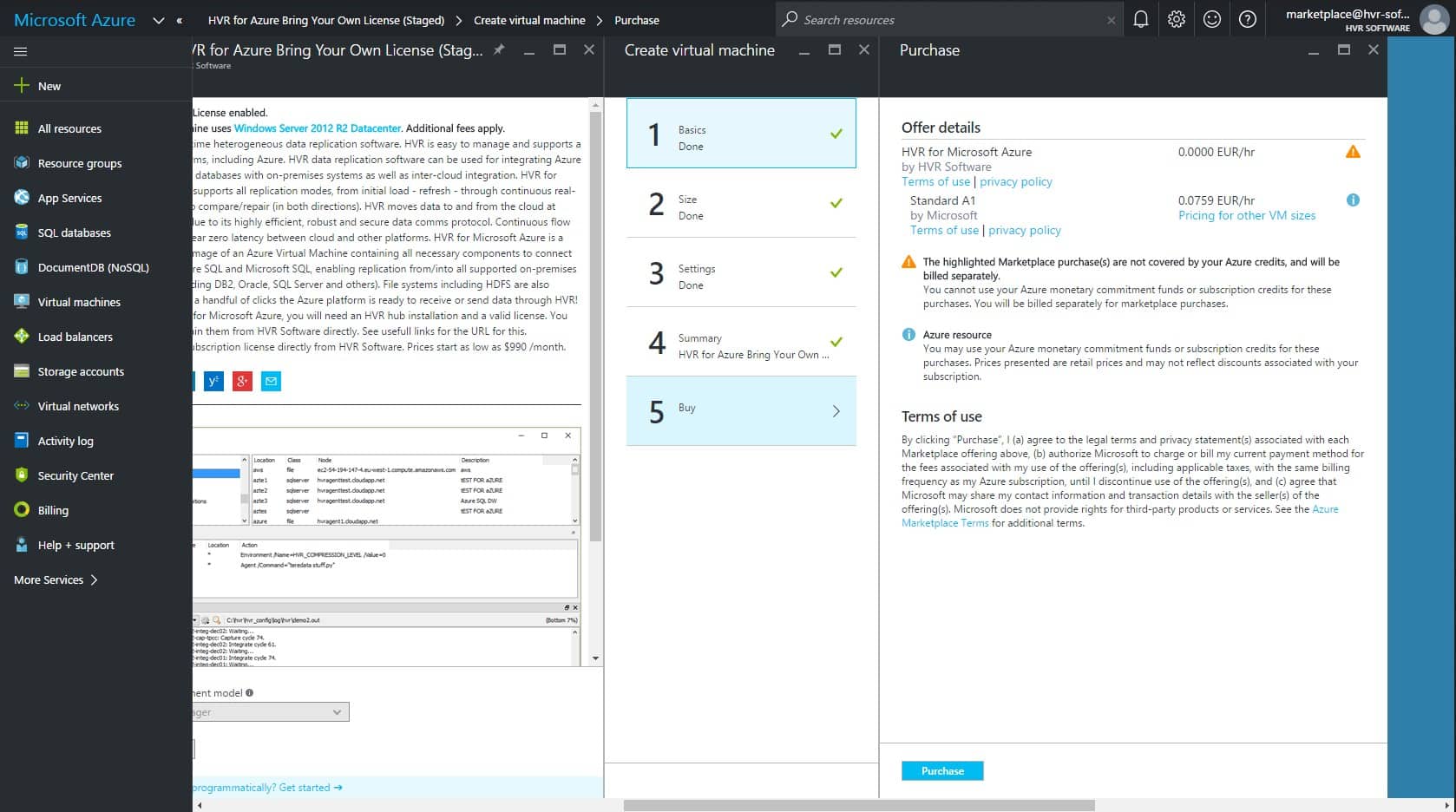

Fivetran HVR can interact with on-premises systems and cloud platforms. Data can be moved between data stores in any location. The HVR software itself can be hosted on your own servers or on a cloud platform – specifically AWS and Azure. The on-premises option runs on Windows Server or Linux. Fivetran offers a Free edition of HVR. This will handle up to 500,000 records per month. You can get the unlimited version on a 14-day free trial.

3. HVR

HVR delivers on its motto: “Fresh data. Where you need it.” This is a tool that can handle large amounts of data and complex ETL requirements. It is designed for medium to large enterprises.

Key Features:

- Real-Time Data Mapping and Replication: Facilitates the handling of high data volumes with real-time mapping and replication across various architectures.

- Cloud and On-Premises Integration: Enables bi-directional data movement between cloud environments and on-premises solutions, supporting seamless data integration.

- Change Data Capture (CDC): Offers real-time CDC capabilities, allowing for immediate analysis and replication of data changes across systems.

- Versatile Data Movement: Capable of migrating data across both homogeneous and heterogeneous environments efficiently, ensuring flexibility in data handling.

- Broad DBMS Compatibility: Supports log-based change data capture for a wide range of Database Management Systems, including SQL Server, Oracle, and more.

Why do we recommend it?

HVR stands out for its robust real-time data mapping and replication capabilities, making it an invaluable tool for enterprises dealing with large data volumes and complex integration needs. Its support for both cloud and on-premises data movement, along with real-time CDC, provides businesses with the flexibility and immediacy needed for effective data analysis and decision-making. HVR’s ability to handle data across various architectures and platforms makes it a top recommendation for organizations aiming to maintain fresh and actionable data insights.

It is a data integration solution packed with features that make it easy for administrators to control various architectures, work with numerous data types, and present it all in insightful statistical reports.

Who is it recommended for?

HVR is recommended for medium to large enterprises that require dynamic data integration and replication across multiple platforms and environments. It is particularly suited for businesses looking to leverage real-time data movement and change data capture to drive analytics, decision-making, and seamless data migration between transactional databases, data lakes, and cloud environments.

Pros:

- Scalability and Flexibility: Excellently manages large volumes of data and complex ETL processes, adaptable to various data architectures.

- Continuous Data Analysis: Real-time data movement and CDC enable continuous monitoring and analysis of data changes, enhancing decision-making.

- Comprehensive Integration: Facilitates robust integration capabilities, allowing for efficient data movement between diverse environments and platforms.

Cons:

- Complexity for Smaller Enterprises: While ideal for medium to large enterprises, the complexity and scale of HVR may be daunting for smaller organizations.

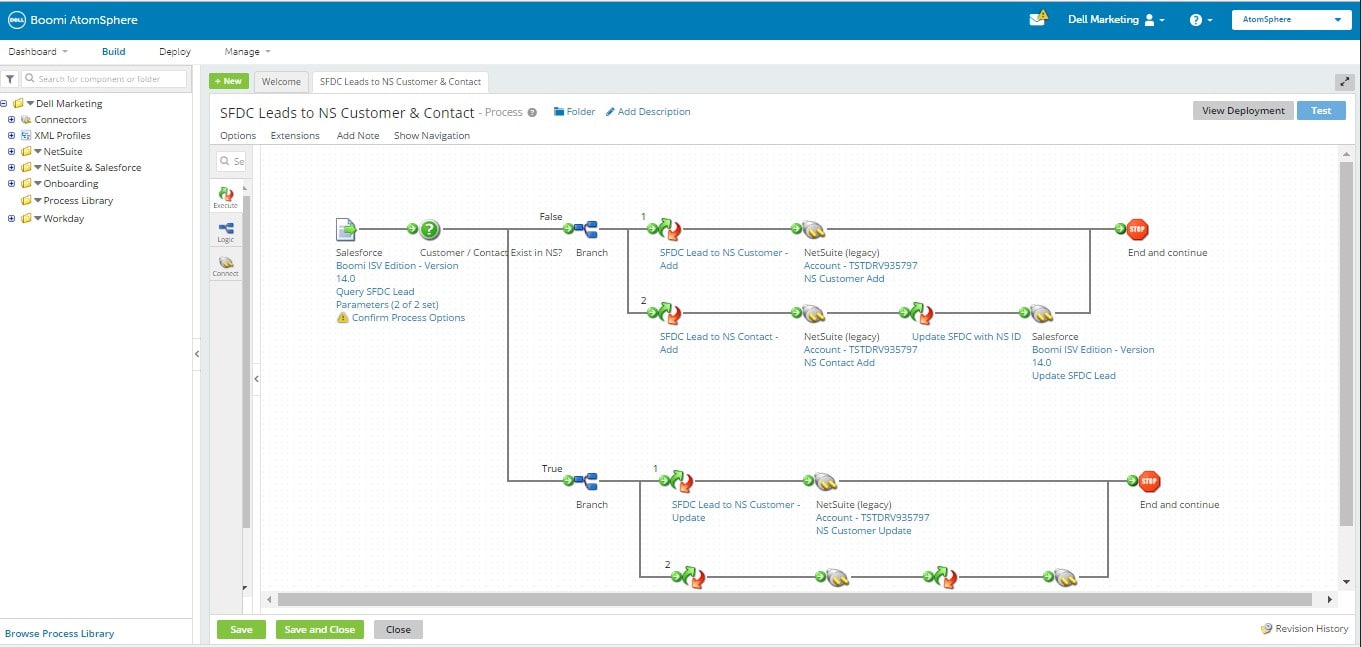

4. Boomi AtomSphere

Boomi AtomSphere is an on-demand multi-tenant cloud integration platform that syncs and replicates data between apps, SaaS, databases, and more. It integrates different applications with the help of a library of pre-built solutions, or customized ones for more unique requirements.

Key Features:

- User-Friendly Interface: Boomi AtomSphere is designed with a drag-and-drop UI, making it accessible for users of all technical levels to sync and replicate data.

- Cloud-Based Integration Processes: Allows for the creation of “Atoms” to facilitate data transfer between cloud and on-premises applications, tailored to specific integration needs.

- Future-Proof Testing with Boomi Assure: Ensures that integration processes remain functional across new AtomSphere releases.

- Bulk-Copy Feature: Supports loading large volumes of data into databases, enhancing the efficiency of data management tasks.

- Centralized Project Management: Offers a platform for managing a vast number of integration projects in one central location.
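Bulk loading generally means sending rows to the database in large batches rather than one statement per row. Boomi's bulk-copy feature works through its own connectors, so the Python sketch below only illustrates the batching idea, using the standard-library `sqlite3` module, a hypothetical `readings` table, and hypothetical `chunks` and `bulk_load` helpers.

```python
import sqlite3
from itertools import islice
from typing import Iterable, Iterator


def chunks(rows: Iterable[tuple], size: int) -> Iterator[list[tuple]]:
    """Yield successive lists of at most `size` rows from any iterable."""
    it = iter(rows)
    while batch := list(islice(it, size)):
        yield batch


def bulk_load(conn: sqlite3.Connection, rows: Iterable[tuple], batch_size: int = 1000) -> int:
    """Insert rows into the hypothetical `readings` table one batch at a time.

    Each batch is sent with a single executemany() call and committed, so
    only one batch is held in memory when `rows` is a generator.
    """
    total = 0
    for batch in chunks(rows, batch_size):
        conn.executemany("INSERT INTO readings (sensor_id, value) VALUES (?, ?)", batch)
        conn.commit()
        total += len(batch)
    return total


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE readings (sensor_id INTEGER, value REAL)")
loaded = bulk_load(conn, ((i % 10, i * 0.5) for i in range(25_000)), batch_size=5_000)
print(loaded)  # 25000
```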

Why do we recommend it?

Boomi AtomSphere stands out for its intuitive drag-and-drop interface and robust integration capabilities, making it a formidable solution for businesses seeking to bridge the gap between disparate systems and data sources. Its cloud-first approach, coupled with features like Boomi Assure and bulk-copy functionality, positions it as a future-proof platform capable of handling extensive data volumes with uncompromised security. This combination of ease of use, scalability, and advanced integration features makes Boomi AtomSphere a recommended choice for medium to large organizations looking to streamline their data management processes.

Who is it recommended for?

Boomi AtomSphere is ideally suited for medium-to-large businesses and enterprises that require a powerful yet accessible platform to integrate cloud and on-premises applications. It is particularly beneficial for organizations with extensive data management needs, such as those using Oracle E-Business Suite or Hadoop HDFS. Businesses seeking a scalable and secure cloud integration solution that can centralize and simplify their data syncing and replication efforts will find Boomi AtomSphere to be an invaluable tool.

Pros:

- Ease of Use: Simplifies data syncing and replication for non-technical users, encouraging broader adoption within organizations.

- Versatile Integration Capabilities: Facilitates seamless connections between a wide range of applications, databases, and systems.

- Scalability: Well-suited for medium to large businesses and enterprises, supporting extensive data management needs.

- Cloud-First Architecture: Built on a sophisticated distributed architecture that ensures robust security for cloud integrations.

Cons:

- Learning Curve for Advanced Features: While the basic operations are user-friendly, mastering the platform’s more advanced capabilities may require additional learning time.

Try Boomi AtomSphere for FREE for 30 days.

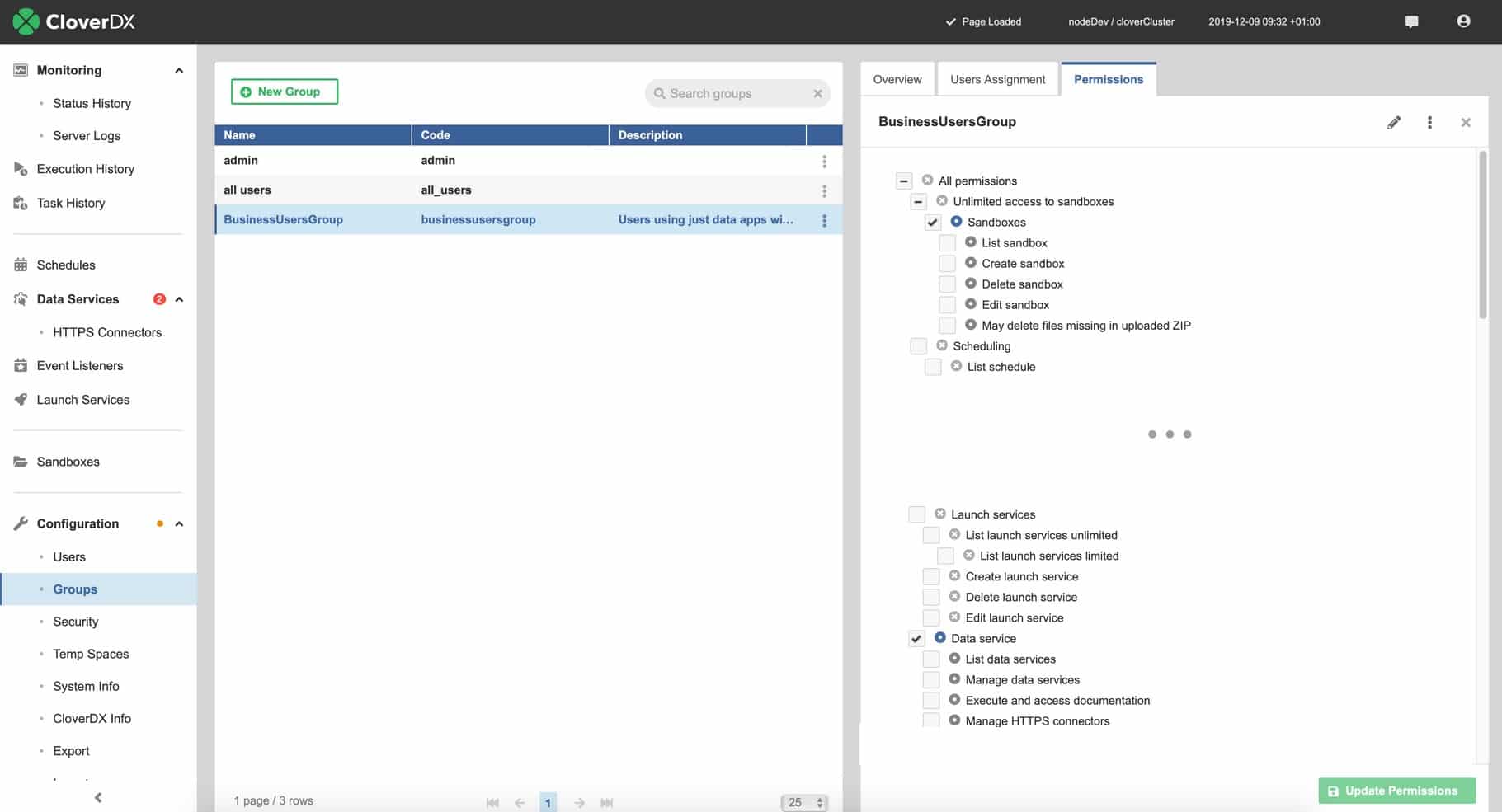

5. CloverDX

CloverDX boasts that it can connect to “just about any data source or output.” It is an easy-to-use tool that supports on-premises, cloud, multi-cloud, and hybrid deployments. Once deployed, it is easy to master the platform and start creating, automating, running, and publishing data pipelines.

Key Features:

- Versatile Integration Capabilities: CloverDX boasts the ability to connect to virtually any data source, output, or storage platform, emphasizing its flexibility across data types, deployments, and architectures.

- End-to-End Automation: Offers a blend of visual and coded design approaches, enabling both beginners and advanced users to efficiently validate, transform, and move data.

- Developer-Friendly Tools: Provides a comprehensive design tool for creating, debugging, running, and troubleshooting data flows and ETL processes with precision.

- Flexible Deployment Options: Supports deployment both on-premises and in the cloud, including multi-cloud and hybrid environments, ensuring adaptability to various enterprise needs.

- Customizable Enterprise Data Management: Integrates seamlessly into complex tech stacks and automation requirements, making it suitable for a wide range of enterprise projects.

- Built-In Data Quality Features: Includes features to ensure data cleanliness in real-time, with automatic checks for conformity to predefined rules and validation of data formats.
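Rule-based quality checks of this kind boil down to testing each field against a predicate and reporting violations. The Python sketch below assumes a hypothetical rule set for three fields and a hypothetical `validate` function; CloverDX defines such rules through its own tooling rather than in code like this.

```python
import re
from typing import Any, Callable

# Hypothetical rule set: field name -> (description, predicate).
RULES: dict[str, tuple[str, Callable[[Any], bool]]] = {
    "email": ("valid email format",
              lambda v: isinstance(v, str) and re.fullmatch(r"[^@\s]+@[^@\s]+\.[^@\s]+", v) is not None),
    "country": ("two-letter upper-case country code",
                lambda v: isinstance(v, str) and re.fullmatch(r"[A-Z]{2}", v) is not None),
    "age": ("integer between 0 and 130",
            lambda v: isinstance(v, int) and not isinstance(v, bool) and 0 <= v <= 130),
}


def validate(record: dict[str, Any]) -> list[str]:
    """Return the rule violations for one record (an empty list means it conforms)."""
    errors = []
    for field_name, (description, check) in RULES.items():
        if field_name not in record:
            errors.append(f"{field_name}: missing")
        elif not check(record[field_name]):
            errors.append(f"{field_name}: expected {description}, got {record[field_name]!r}")
    return errors


good = {"email": "ada@example.com", "country": "GB", "age": 36}
bad = {"email": "not-an-email", "country": "gb", "age": 36}
print(validate(good))  # []
print(validate(bad))
```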

Why do we recommend it?

CloverDX is recommended for its exceptional flexibility and comprehensive integration capabilities, making it a standout choice for enterprises faced with complex data mapping needs. Its blend of visual and coded design tools, alongside developer-friendly features for creating and managing data flows, positions CloverDX as a highly adaptable and efficient solution for end-to-end data integration and quality assurance.

Who is it recommended for?

CloverDX is ideally suited for medium to large enterprises that require a robust data management platform capable of handling complex integration, automation, and data quality tasks across diverse environments and tech stacks. Organizations looking for a customizable and scalable solution to support their on-premises, cloud, multi-cloud, or hybrid deployment strategies will find CloverDX to be an invaluable asset in their data management toolkit.

Pros:

- High Integration Flexibility: Its ability to connect with almost any data source provides unparalleled flexibility for data mapping projects.

- Ease of Use and Mastery: The platform’s user-friendly design aids in quick learning and efficient data pipeline creation and automation.

- Customization and Scalability: CloverDX’s high customizability and scalability make it a fit for complex enterprise environments and tech stacks.

- Data Quality Assurance: The platform’s focus on data quality ensures clean, reliable data output, enhancing overall data integrity.

Cons:

- Complexity for Smaller Projects: While highly customizable, the platform’s extensive features and capabilities might be more than is required for smaller, simpler projects.

Try CloverDX FREE for 45 days or book a demo.

6. IBM InfoSphere DataStage

IBM InfoSphere DataStage is an ETL tool and part of the IBM InfoSphere Information Server. It uses graphical UIs to create data integration solutions. Coming from a company that has made data storage its bread and butter, it shouldn’t be a surprise that they have put all their knowledge about data mapping into their tool.

Key Features:

- Intuitive Graphical Interfaces: Features easy-to-navigate UIs for creating data integration flows, minimizing the need for coding.

- Scalable Data Processing: Offers substantial data processing capabilities with built-in auto workload balancing for high-performance computing.

- Wide Range of Integrations: Seamlessly integrates with various IBM products, including IBM Cloud Pak for Data, enhancing data virtualization, business intelligence, and governance.

- Flexible Data Delivery: Capable of delivering processed and transformed data to multiple destinations, including data warehouses, operational data stores, and real-time web services.

- Automated Job Generation: Allows users to auto-generate jobs and enforce custom rules for data processing and delivery.

- Data Quality Management: Utilizes IBM InfoSphere QualityStage to resolve data quality issues automatically, ensuring clean data delivery to target environments.

Why do we recommend it?

IBM InfoSphere DataStage is recommended for its robust data processing and integration capabilities, backed by IBM’s extensive experience in data storage and security. Its intuitive graphical interfaces, coupled with powerful scalability and seamless integration with IBM’s suite of data management tools, make it an exceptional choice for enterprises seeking to streamline their ETL processes. The tool’s emphasis on data quality and its ability to automate job generation and enforce rules further enhance its value, offering users a comprehensive solution for complex data integration challenges.

Who is it recommended for?

This tool is particularly suited for medium to large enterprises that require a scalable and high-performance data integration platform. Organizations already invested in IBM’s ecosystem, or those looking to leverage IBM Cloud Pak for Data and other IBM services for data virtualization, business intelligence, and governance will find InfoSphere DataStage to be a compelling choice. It is also ideal for businesses prioritizing data quality and efficiency in their data integration and management processes.

Pros:

- User-Friendly Design: Simplifies the data integration process with its graphical interfaces, making it accessible to users of different skill levels.

- High Performance and Elasticity: Its scalable architecture ensures efficient data handling and computing resource management.

- Comprehensive Integration Capabilities: Offers robust integration options, particularly with IBM’s native products, facilitating a broad range of data operations.

- Versatile Data Handling: Enables the delivery of data to a variety of systems and platforms, supporting diverse operational and analytical needs.

Cons:

- IBM Product-Centric: While offering extensive integration options, its close tie-in with IBM products might limit flexibility for users entrenched in other ecosystems.

Try IBM InfoSphere DataStage for FREE.

7. Oracle Data Integrator

Oracle Data Integrator is a comprehensive data integration platform from one of the leading technology companies. It covers all data integration requirements, from high-volume, high-performance batch loads to event-driven, trickle-feed integration processes and SOA-enabled data services.

Like most Oracle products, this too is targeted at larger enterprises that can invest in the tool and the technology needed to run it.

Key Features:

- Enhanced Developer Productivity: Boasts a flow-based declarative user interface that simplifies control and expedites project delivery.

- Seamless Oracle Cloud Integration: Integrates effortlessly with Oracle Cloud Infrastructure (OCI) services, ensuring secure data storage and management.

- Comprehensive Knowledge Modules: Utilizes Knowledge Modules (KMs) for defining and executing integration processes, enhancing efficiency and adaptability.

- Oracle ERP Cloud Compatibility: Works in tandem with Oracle Enterprise Resource Planning (ERP) Cloud, supporting key business processes.

- Advanced Data Processing: Features RESTful Service integration and employs data chunking and pagination for handling large data payloads efficiently.

- Dimensional Data Enhancement: Introduces Dimensions and Cubes for organizing and storing data, optimizing the ETL process for detailed analysis.

- Release Management: Implements a structured release management process for safe and tested deployment of projects from development to production environments.
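Pagination means retrieving a large result set in fixed-size pages instead of one oversized response. The Python sketch below shows generic offset/limit pagination against a hypothetical `fake_fetch` function standing in for a REST endpoint; it is not Oracle Data Integrator's implementation, and real APIs may use cursors or page tokens instead of offsets.

```python
from typing import Any, Callable, Iterator

# Hypothetical page fetcher signature: (offset, limit) -> list of records.
FetchPage = Callable[[int, int], list[dict[str, Any]]]


def paginate(fetch_page: FetchPage, page_size: int = 100) -> Iterator[dict[str, Any]]:
    """Yield records page by page until the source returns a short (or empty) page."""
    offset = 0
    while True:
        page = fetch_page(offset, page_size)
        yield from page
        if len(page) < page_size:
            break
        offset += page_size


# Stand-in for a REST endpoint holding 250 records.
DATA = [{"id": i} for i in range(250)]


def fake_fetch(offset: int, limit: int) -> list[dict[str, Any]]:
    return DATA[offset:offset + limit]


records = list(paginate(fake_fetch, page_size=100))
print(len(records))  # 250
```

Because `paginate` is a generator, downstream steps can process each record as its page arrives instead of waiting for the whole payload.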

Why do we recommend it?

Oracle Data Integrator is recommended for its comprehensive approach to data integration, offering high developer productivity through its intuitive user interface and seamless integration with Oracle Cloud Infrastructure and ERP Cloud. Its use of Knowledge Modules and advanced data processing capabilities makes it a highly efficient tool for managing large data volumes. The platform’s emphasis on secure connectivity and structured data organization positions it as a leading solution for enterprises that prioritize detailed data analysis and secure data management within the Oracle ecosystem.

Who is it recommended for?

Oracle Data Integrator is ideally suited for larger enterprises that rely on Oracle’s technology stack and require a powerful data integration platform to handle high-volume, high-performance data loads. Organizations that leverage Oracle ERP Cloud and seek a seamless integration experience across Oracle Cloud Infrastructure will find this tool particularly beneficial. It’s also well-suited for businesses looking for robust release management features to ensure smooth transitions from development to production environments.

Pros:

- Optimized for Oracle Ecosystems: Designed to integrate seamlessly with Oracle’s suite of products, offering a unified data management solution.

- Scalable Data Integration: Capable of handling high-volume and high-performance data loads, suitable for large enterprises with extensive data requirements.

- Comprehensive Integration Capabilities: Supports a wide range of data integration needs, from batch loads to real-time processing and SOA-enabled data services.

- Enhanced Data Organization: Empowers organizations to structure data effectively for detailed analysis, leveraging dimensional objects for in-depth insights.

Cons:

- Oracle-Centric Focus: May present integration challenges for businesses not fully invested in the Oracle ecosystem or those using diverse technology stacks.

Download an evaluation version of Oracle Data Integrator for FREE.

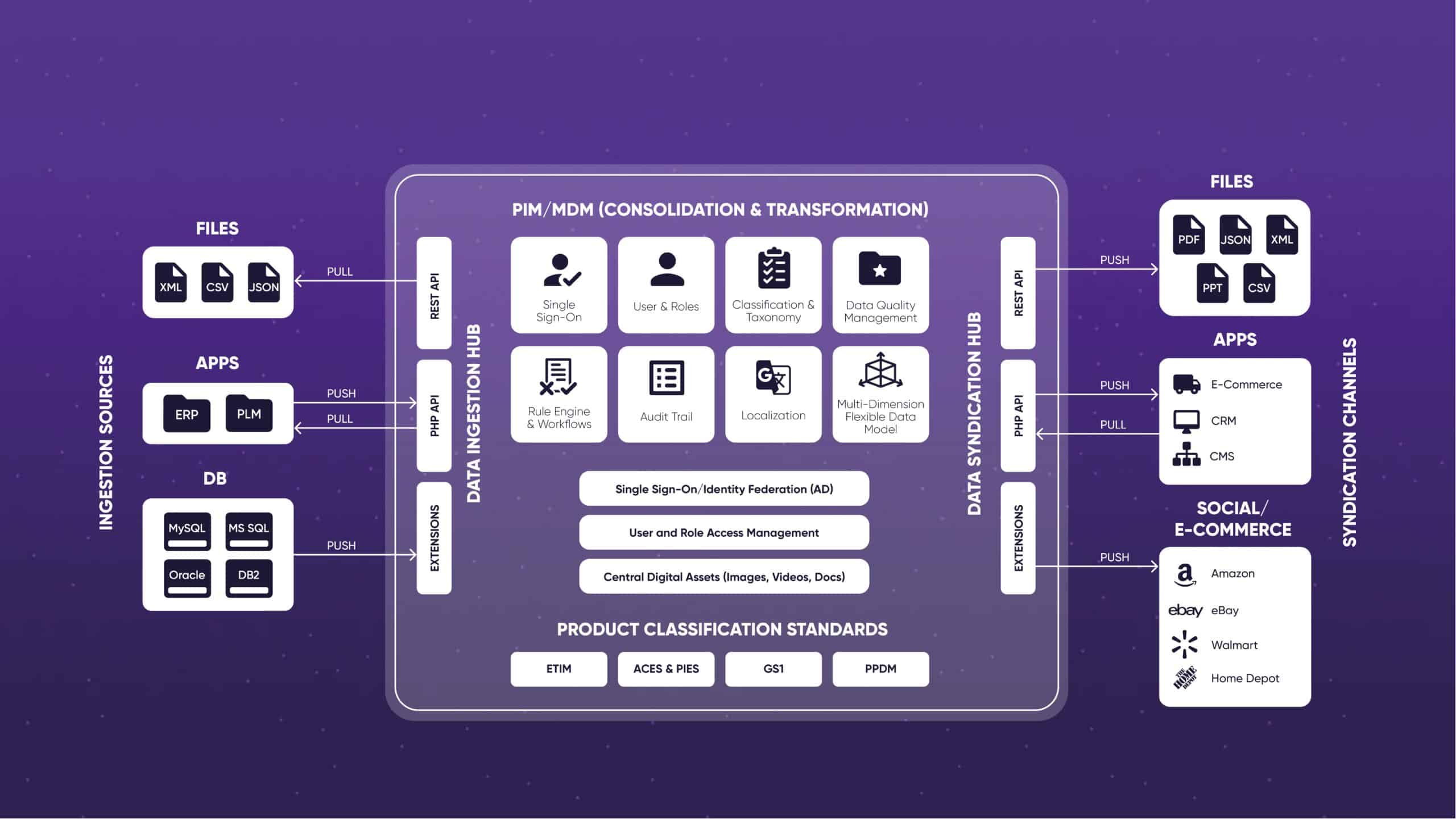

8. Pimcore Product Information Management (PIM)

Pimcore Product Information Management (PIM) – a data mapping tool that can be used for marketing.

Pimcore Product Information Management (PIM) is designed to bind together all the data on a business network and present it as up-to-date product data. It is built on enterprise open-source technology and exposes easy-to-use APIs that allow all of that data to be retrieved in real time.

Key Features:

- Seamless Integration: Integrates with existing architectures, promoting data reuse and the extension of functionality.

- Wide Connectivity: Compatible with a vast array of systems, including ERP, CRM, BI, and various third-party solutions.

- Offline Data Compatibility: Supports importing from and exporting to offline data sources such as MS Excel and CSV files, with features for automatic data matching and complex transformation rules.

- Low-Code Configuration: Offers a low-code environment that still supports powerful import features for data from external sources.

- Flexible Data Management: Lets administrators define efficient strategies for data updates, deployment, and cleanup.

- Status Alerts and Logging: Provides timely alerts and detailed logs to monitor processes and troubleshoot issues effectively.

- Extensive Channel Distribution: Enables the delivery of product information and content across over 1,500 marketing, shopping, retail, and business channels.
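The offline import features above boil down to mapping spreadsheet columns onto product fields and applying transformation rules along the way. Pimcore configures this through its own interface, so the following is only a generic Python sketch of the idea using the standard `csv` module; the column names, target fields, and rules in `MAPPING` are hypothetical, and the mapping is explicit rather than automatically matched.

```python
import csv
import io
from typing import Callable, Dict, List, Tuple

# Hypothetical mapping: target field -> (source CSV column, transformation rule).
FieldMap = Dict[str, Tuple[str, Callable[[str], object]]]

MAPPING: FieldMap = {
    "sku": ("Item Code", lambda v: v.strip().upper()),
    "name": ("Product Name", lambda v: v.strip()),
    # Accept a decimal comma, as exported by some spreadsheet locales.
    "price": ("Price (EUR)", lambda v: round(float(v.replace(",", ".")), 2)),
}


def import_csv(text: str, mapping: FieldMap) -> List[Dict[str, object]]:
    """Read CSV text and map each row onto the target product fields."""
    reader = csv.DictReader(io.StringIO(text))
    # Fail early if a mapped source column is absent from the header row.
    header = reader.fieldnames or []
    missing = [src for src, _ in mapping.values() if src not in header]
    if missing:
        raise ValueError(f"CSV is missing columns: {missing}")
    return [
        {target: rule(row[src]) for target, (src, rule) in mapping.items()}
        for row in reader
    ]


if __name__ == "__main__":
    sample = (
        "Item Code,Product Name,Price (EUR)\n"
        ' ab-100 ,Desk Lamp,"19,90"\n'
        "CD-200,Office Chair,149.5\n"
    )
    for product in import_csv(sample, MAPPING):
        print(product)
```

Keeping the mapping as data rather than code means a new supplier file only needs a new `MAPPING` entry, which is roughly what a low-code import configuration provides.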

Why do we recommend it?

Pimcore Product Information Management (PIM) is highly recommended for its ability to unify data across a business network, providing real-time, up-to-date product information. Its robust integration capabilities, coupled with extensive support for both online and offline data sources, make it a versatile tool for any organization looking to improve its product data management and marketing strategies.

Who is it recommended for?

Pimcore PIM is ideally suited for businesses of all sizes that require a cohesive platform for managing and distributing product information across various channels. It is particularly beneficial for organizations with complex data ecosystems involving multiple systems like ERP, CRM, and BI. Companies looking to maximize their product visibility across a wide range of marketing, shopping, and business channels will find Pimcore PIM’s extensive channel support invaluable for their marketing and sales strategies.

Pros:

- Universal Data Integration: Its ability to integrate with a broad spectrum of systems ensures comprehensive data connectivity.

- Efficient Offline Data Handling: The platform’s capacity to interact with offline data sources enhances flexibility in data management.

- Powerful yet Accessible: Strikes a balance between offering powerful features and maintaining a user-friendly, low-code interface.

- Strategic Data Management: Facilitates strategic planning for data updates and distribution, streamlining operations.

- Comprehensive Channel Support: Its support for an extensive range of channels amplifies product visibility and marketing potential.

Cons:

- Learning Curve for New Users: Despite its low-code approach, new users may require time to fully grasp its wide range of functionalities.

Get a Pimcore Product Information Management (PIM) demo for FREE.

9. Talend Data Integration

Talend Data Integration – a flexible enterprise-level data mapping tool that integrates into any complex infrastructure.

Talend Data Integration is a software solution for ETL and data integration across enterprise-level cloud or on-premises architectures.

Talend is a scalable tool that offers over 900 pre-built components and can easily integrate into any corporate network environment.

Key Features:

- Automated Data Workflows: Talend automates integration, transformation, and mapping processes, ensuring clean and secure data flows.

- Universal Data Handling: Capable of processing any data type from any source to any destination, whether on-premises or cloud-based.

- Pipeline Designer: Features a user-friendly interface for designing, deploying, and reusing data pipelines across various environments.

- Data Fabric and Inventory: Provides tools for obtaining, managing, and sharing clean and controlled data, minimizing risks and enhancing data governance.

- Data Preparation Tools: Offers intuitive self-service tools for data organization and collaboration, simplifying the data preparation process.
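Talend's Pipeline Designer is an interface-driven tool, so there is no code to copy from it. The Python sketch below only illustrates the general idea behind reusable pipelines: an ordered list of steps that can be defined once and run against data from different environments. `Pipeline`, `drop_incomplete`, and `rename` are hypothetical names, not Talend APIs.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Iterable, List

Record = Dict[str, object]
Step = Callable[[Iterable[Record]], Iterable[Record]]


@dataclass
class Pipeline:
    """A reusable, ordered list of transformation steps (illustrative only)."""

    name: str
    steps: List[Step] = field(default_factory=list)

    def add(self, step: Step) -> "Pipeline":
        self.steps.append(step)
        return self  # return self so steps can be chained

    def run(self, records: Iterable[Record]) -> List[Record]:
        # Each step receives the output of the previous one.
        for step in self.steps:
            records = step(records)
        return list(records)


def drop_incomplete(records: Iterable[Record]) -> Iterable[Record]:
    """Data-quality step: discard records with empty or missing values."""
    return (r for r in records if all(v not in (None, "") for v in r.values()))


def rename(mapping: Dict[str, str]) -> Step:
    """Mapping step factory: rename source fields to target field names."""
    def step(records: Iterable[Record]) -> Iterable[Record]:
        return ({mapping.get(k, k): v for k, v in r.items()} for r in records)
    return step


# Defined once, then reused for any source with the same layout.
customer_sync = (
    Pipeline("customer_sync")
    .add(drop_incomplete)
    .add(rename({"cust_nm": "customer_name"}))
)

if __name__ == "__main__":
    on_prem = [{"cust_nm": "Acme", "id": 1}, {"cust_nm": "", "id": 2}]
    cloud = [{"cust_nm": "Globex", "id": 7}]
    print(customer_sync.run(on_prem))
    print(customer_sync.run(cloud))
```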

Why do we recommend it?

Talend Data Integration is recommended for its comprehensive and flexible approach to data integration across enterprise-level architectures. Its automation of data workflows, coupled with the ability to handle diverse data types and sources, makes it a powerful tool for organizations seeking efficient and secure data management solutions. The inclusion of user-friendly design tools and robust data governance features further enhances its appeal, providing a solid foundation for managing complex data integration tasks with ease.

Who is it recommended for?

This solution is ideally suited for medium to large enterprises that require a scalable and flexible data integration platform capable of accommodating complex infrastructure and varied data sources. Organizations looking to streamline their ETL processes, enhance data governance, and deploy data pipelines across multiple environments will find Talend Data Integration to be an invaluable asset. It is particularly beneficial for data architects and IT professionals tasked with designing and managing extensive data integration workflows.

Pros:

- Scalability and Flexibility: Its scalability and over 900 pre-built components allow for seamless integration into any corporate network environment.

- Versatile Data Processing: Talend’s ability to handle diverse data types and sources ensures flexibility in managing data workflows.

- Enhanced Data Governance: The Data Fabric and Inventory features support comprehensive data governance and risk management.

- User-Friendly Design Tools: The Pipeline Designer and Data Preparation tools facilitate easy design and preparation of data pipelines and datasets.

Cons:

- Complexity for Beginners: The wide array of features and capabilities might present a steep learning curve for new users or smaller teams.

Try Talend Data Integration for FREE.

Who are the data mapping tools for?

The ideal candidates for deploying one of the data mapping tools we have just reviewed are businesses with:

- Data sources spread across various incompatible systems, with a variety of data types

- Complex network architectures and remote source and destination data hosts

- Requirements for accurate, comprehensive, real-time reporting on the overall health of the business, including remote sites

- A budget set aside for a significant data mapping project that must be handled accurately and in an organized manner

These are the requirements that make it a must to adopt and deploy one of the best data mapping tools and software we have covered in this post.

Let us know what you think – leave us a comment below.