The field of Artificial Intelligence (AI) dates back to 1955. It has been around for a long time, but for much of its history it existed only in academic environments. Whenever the media takes an interest in AI, the creative imagination of its writers extrapolates the potential of the technology. This attention comes in waves, roughly once a decade. However, the fantastic possibilities projected by the media set up expectations that are too great to fulfill. So, AI disappoints, and the media moves on to another hot topic until, a decade later, the cycle starts again. Media attention to the potential of AI attracts investment into the field; reports of its failings discourage investment.

AI is starting to have real-world applications, and over the past 65 years the technology has advanced far enough that it can produce impressive copy for journalists. This time, the AI revolution will be able to hold public interest long enough for investment to keep flowing, allowing the discipline to grow and develop commercial products.

The application of AI is starting to improve performance in network management behind the scenes by contributing to more efficient data processing.

What is AI?

In academic circles, the definition of AI has settled into a precise meaning. In the early years of the discipline, the benchmark for AI was whether a machine hidden behind a screen could fool a person into thinking that he was communicating with another human being. This is known as the Turing Test.

Today, the industry and academic understanding of what AI is has narrowed to the application of heuristics and probability in the execution of programming code. By reducing the identification of AI to something more mundane, the adherents to the field have managed to tame the wild fantasies of journalists. Without the danger of others over-promising, AI specialists have a better chance of hitting more achievable targets.

AI is particularly suited to solving the biggest current problem in almost every field of IT, which is the time it takes to wade through masses of data.

You can’t spot a problem and fix it if you didn’t record its cause. However, you don’t know in advance what is going to go wrong. Therefore, IT systems have become very good at measuring and logging everything.

The first step in managing all of these system event records is to get a log management tool. However, the amount of data that this strategy produces exceeds the ability of traditional programming methods to identify anything meaningful quickly enough for the results to be actionable. This is an ideal application for AI’s blend of computing speed and human-like heuristics.

The big advantage of computer programs is that they are methodical and computers don’t run out of patience or forget to do things like humans do. While human intelligence is disorganized, it cuts out a lot of unlikely options, getting to the likely solution very quickly.

If a car mechanic starts up a car and hears a click, he will say “It’s probably the starter motor.” A conventional diagnostic program, by contrast, would work through every single component on the car until it found the fault. The “probably” in the mechanic’s initial diagnosis is the key to artificial intelligence: AI applies probability to program code. The mechanic knows not to waste time examining the bolts on the wheels if the car won’t start. AI helps computer programs exclude unnecessary steps and reach a solution faster.
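The difference between the mechanic and the exhaustive program can be sketched in a few lines of Python. This is a minimal illustration, not a real diagnostic system: the component names and fault probabilities below are hypothetical. Checking components in order of probability finds the starter motor on the first check, while a fixed alphabetical sweep needs four.

```python
# Hypothetical fault probabilities for a car that clicks but won't start.
# These numbers are illustrative assumptions, not real diagnostic data.
FAULT_PRIORS = {
    "wheel_bolts": 0.001,
    "starter_motor": 0.55,
    "battery": 0.35,
    "ignition_switch": 0.08,
    "fuel_pump": 0.019,
}

def exhaustive_order(components):
    """Conventional approach: check every component in a fixed (alphabetical) order."""
    return sorted(components)

def probable_order(priors):
    """Heuristic approach: check the most probable faults first."""
    return sorted(priors, key=priors.get, reverse=True)

def checks_until_found(order, actual_fault):
    """Count how many components are inspected before the fault is found."""
    return order.index(actual_fault) + 1

actual = "starter_motor"
print("exhaustive:", checks_until_found(exhaustive_order(FAULT_PRIORS), actual))
print("probable:", checks_until_found(probable_order(FAULT_PRIORS), actual))
```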

What is Machine Learning?

Machine Learning (ML) is a mechanism within the discipline of AI. If you ask a car mechanic how he knows that a car won’t start because of a fault in the starter motor and to not bother looking at the wheel bolts, he will respond that it is an obvious deduction. However, that instant problem recognition wouldn’t be so clear to a two-year-old. Machine learning provides that basis of knowledge of what is probable.
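One simple way to build that basis of knowledge of what is probable is to count outcomes in past repair records. The sketch below assumes a frequency-counting model as a stand-in for ML, and the repair history is invented for illustration; the resulting probabilities are the kind of figures the mechanic’s “probably” relies on.

```python
from collections import Counter, defaultdict

# Hypothetical repair history: (observed symptom, component that was at fault).
HISTORY = [
    ("click_no_start", "starter_motor"),
    ("click_no_start", "starter_motor"),
    ("click_no_start", "battery"),
    ("click_no_start", "starter_motor"),
    ("no_crank_no_lights", "battery"),
    ("no_crank_no_lights", "battery"),
]

def learn_fault_probabilities(history):
    """Estimate P(fault | symptom) by counting past outcomes for each symptom."""
    counts = defaultdict(Counter)
    for symptom, fault in history:
        counts[symptom][fault] += 1
    model = {}
    for symptom, faults in counts.items():
        total = sum(faults.values())
        model[symptom] = {fault: n / total for fault, n in faults.items()}
    return model

model = learn_fault_probabilities(HISTORY)
print(model["click_no_start"])  # {'starter_motor': 0.75, 'battery': 0.25}
```

Each new repair record added to the history shifts the estimates, which is the sense in which the model “learns” from experience.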

AI isn’t suitable for a lot of applications. However, thanks to ML, it is becoming a useful aid in cutting out time-wasting fault diagnosis and so it speeds up the delivery of results.

Once a problem has been identified, an AI system can apply a strategy known as “triage,” which focuses resources on a specific area. For example, if the system identifies a user account that is displaying suspicious behavior, it is worth applying deeper tracking techniques to that account. Applying the same intensity of scrutiny to all accounts would waste resources.
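Triage can be sketched as a selection step that spends an expensive resource only where a cheap score says it is worthwhile. The suspicion scores, the threshold of 0.5, and the cap on deeply tracked accounts are all hypothetical parameters chosen for illustration.

```python
# Hypothetical per-account suspicion scores (0.0 = normal, 1.0 = highly unusual).
scores = {"alice": 0.05, "bob": 0.92, "carol": 0.10, "dave": 0.71, "erin": 0.02}

def triage(scores, threshold=0.5, max_deep=10):
    """Return the accounts that warrant deep tracking, most suspicious first.

    Only accounts at or above the threshold are selected, capped at max_deep so
    that expensive monitoring resources are not spread across every account.
    """
    flagged = [acct for acct, s in scores.items() if s >= threshold]
    flagged.sort(key=lambda acct: scores[acct], reverse=True)
    return flagged[:max_deep]

deep_tracking = triage(scores)
print(deep_tracking)  # ['bob', 'dave']
```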

AI and ML in network management

In network management, there are many issues that require a quick response. There are four areas of network management where AI is being used to address these time-sensitive problems. They are:

- Traffic management

- Performance monitoring

- Capacity planning

- Security monitoring

In most of these cases, ML is proving to be the most helpful of all AI techniques.

Traffic management

Traffic management and performance monitoring are very closely linked. Traffic management services look at the flow of packets through network devices, and network performance systems rely on status reports from those same devices.

Traffic shaping can help network managers get the most out of their physical infrastructure. IT departments on a tight budget can plan capacity below peak throughput as long as interactive applications, such as VoIP, surveillance cameras, and video conferencing systems, get priority through the network’s switches. A common practice with voice traffic is to assign it a dedicated VLAN. This lets voice signals for the office telephone network travel over the same cables that carry data while keeping the two logically separate.
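Giving interactive traffic priority usually comes down to queueing: when a port is congested, packets in higher-priority classes are sent first. The sketch below models strict priority queueing in Python; the class names and priority values are assumptions, and real switches do this in hardware queues selected by CoS or DSCP markings.

```python
import heapq
from itertools import count

# Lower number = higher priority. These class names and values are assumptions
# for illustration; real switches map traffic to hardware queues via CoS/DSCP.
PRIORITY = {"voice": 0, "video": 1, "data": 2}

class StrictPriorityScheduler:
    """Transmit queued packets strictly by traffic class, FIFO within a class."""

    def __init__(self):
        self._heap = []
        self._seq = count()  # tie-breaker keeps arrival order within a class

    def enqueue(self, traffic_class, packet):
        heapq.heappush(self._heap, (PRIORITY[traffic_class], next(self._seq), packet))

    def dequeue(self):
        """Return the next packet to send, or None if nothing is queued."""
        return heapq.heappop(self._heap)[2] if self._heap else None

sched = StrictPriorityScheduler()
for cls, pkt in [("data", "file-1"), ("voice", "rtp-1"), ("data", "file-2"),
                 ("video", "cam-1"), ("voice", "rtp-2")]:
    sched.enqueue(cls, pkt)

print([sched.dequeue() for _ in range(5)])
# ['rtp-1', 'rtp-2', 'cam-1', 'file-1', 'file-2']
```

Strict priority can starve lower classes during sustained congestion, which is one reason switches often combine it with weighted queueing for data traffic.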

Not many switch producers have implemented AI in their onboard traffic management capabilities yet. However, D-Link is ahead of the competition in this field with its Auto Surveillance VLAN (ASV) and Auto Voice VLAN (AVV) system, built into its smart switches.

ASV is intended to create a channel for security camera video feeds that won’t be affected by surges in data traffic. AVV does the same for voice traffic. These AI technologies enable the switch to detect these two types of traffic automatically and prioritize them, so the network manager doesn’t need to log in to the switch to tag VLAN packets and specify how that traffic should be treated.
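The document does not say how ASV and AVV recognize camera and phone traffic. One simple mechanism, assumed here purely for illustration, is to match the vendor prefix of each device’s MAC address (the OUI, its first three bytes) against a table of known phone and camera manufacturers. The OUI values and VLAN IDs below are hypothetical placeholders.

```python
# Hypothetical OUI table: these are locally administered placeholder prefixes,
# not real vendor IDs.
OUI_TABLE = {
    "02:00:01": "voice",         # stands in for an IP phone vendor
    "02:00:02": "surveillance",  # stands in for an IP camera vendor
}

# Hypothetical VLAN IDs assigned to each detected traffic type.
VLAN_FOR = {"voice": 100, "surveillance": 200, "data": 1}

def classify(mac):
    """Return the traffic type for a MAC address based on its OUI prefix."""
    oui = mac.upper().replace("-", ":")[:8]
    return OUI_TABLE.get(oui, "data")

def assign_vlan(mac):
    """Pick the VLAN for a newly seen device without any manual tagging."""
    return VLAN_FOR[classify(mac)]

print(assign_vlan("02:00:01:12:34:56"))  # 100
print(assign_vlan("02-00-02-00-11-22"))  # 200
print(assign_vlan("10:20:30:40:50:60"))  # 1
```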

Cisco Systems is the leading network switch producer in the world. The company has AI products, but it doesn’t implement artificial intelligence on board its switches. Instead, it offloads AI into network monitoring and management software that runs on servers.

Network monitoring

The Cisco approach to AI combines software and hardware products in a bundle called Cisco DNA Assurance. This modular package shows that AI and ML can work with non-AI components to create an advanced solution. The physical element in this bundle is a line of switches called Catalyst 9000. These switches are a data source for the AI service and don’t have any artificial intelligence capabilities themselves.

The artificial intelligence feature in the bundle is called Cisco AI Network Analytics. This analyzes data from a range of sources, including metrics sampled from the switch, to produce solutions for detected network performance issues.

The AI service doesn’t change how network monitoring data is gathered. Instead, it sifts through correlated data from different sources to identify the source of problems. This is an example of how AI processes can speed up existing services.

Traditional network performance monitoring

Network performance monitors have always collected status reports from switches and displayed them as graphs on the network management console. Typically, network monitors use the Simple Network Management Protocol (SNMP). This is an efficient system in which the console periodically polls the switches and routers on a network for status reports. The network management console controls the polling frequency, but in an emergency a network device can send an immediate, unsolicited report, known as a trap.
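Turning polled counters into graphs is simple arithmetic: utilization = (Δoctets × 8) / (Δt × ifSpeed) × 100, where the console compares two readings of an interface’s byte counter taken Δt seconds apart. A minimal sketch, assuming the console has already fetched two samples of the IF-MIB ifInOctets counter and the interface’s ifSpeed value (the SNMP requests themselves are omitted):

```python
COUNTER32_MAX = 2**32  # ifInOctets is a 32-bit counter that wraps back to zero

def counter_delta(previous, current, modulus=COUNTER32_MAX):
    """Difference between two counter samples, allowing for one wrap-around."""
    return (current - previous) % modulus

def utilization_percent(prev_octets, curr_octets, interval_s, if_speed_bps):
    """Inbound utilization of an interface between two SNMP polls."""
    bits = counter_delta(prev_octets, curr_octets) * 8
    return 100.0 * bits / (interval_s * if_speed_bps)

# Two hypothetical polls of a 100 Mbit/s interface taken 60 seconds apart.
print(utilization_percent(1_000_000, 376_000_000, 60, 100_000_000))  # 50.0
```

A 32-bit counter wraps quickly on fast links, which is why the 64-bit ifHCInOctets counter (with modulus 2**64) is normally preferred there.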

SNMP-based monitoring is comprehensive and doesn’t really need to be replaced by AI procedures. However, root cause analysis can be improved. This is why Cisco chose an AI strategy that places new techniques in an analysis module.

Network performance vs traffic analysis

Root cause analysis can be a time-consuming task, and it usually relies on human decision-making. An investigation into what is causing performance problems can involve a series of traffic throughput calculations, and an incomplete solution can push a capacity problem from one node in the network to another.

Network traffic analysis techniques involve processing a lot of data because one of the data sources for this process is the actual traffic passing over a network. Capturing and storing packets generates a lot of data and requires a lot of storage space. Sorting through all of the stored packets to look for useful information can take a long time, and deciding what information is relevant to performance improvement is a matter of judgment.
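Sampling is one common way to shrink this data. The sketch below keeps roughly one packet in n at random and scales each kept packet’s size by n to estimate total volume per protocol, similar in spirit to the random sampling used by sFlow. The packet records are synthetic (protocol, size) pairs rather than real captures.

```python
import random
from collections import Counter

def sampled_volume(packets, n, seed=None):
    """Estimate bytes per protocol by keeping roughly 1 in n packets.

    Each kept packet's size is multiplied by n, so the totals approximate the
    full traffic volume while storing only a fraction of the packets.
    """
    rng = random.Random(seed)
    estimate = Counter()
    for protocol, size in packets:
        if rng.randrange(n) == 0:
            estimate[protocol] += size * n
    return estimate

# Synthetic traffic: 100,000 packets, roughly 70% TCP and 30% UDP by count.
gen = random.Random(1)
traffic = [("tcp" if gen.random() < 0.7 else "udp", gen.randint(64, 1500))
           for _ in range(100_000)]

exact = Counter()
for proto, size in traffic:
    exact[proto] += size

print("exact:    ", dict(exact))
print("1-in-100: ", dict(sampled_volume(traffic, n=100, seed=2)))
```

The two totals should be close while the sampled estimate touches only about 1% of the packets; the error shrinks as traffic volume grows.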

Traffic analysis is an area of network management where AI and machine learning can really help. An ML process can run constantly, recording cumulative results while watching live throughput statistics. Such a strategy can enable incremental adjustments to routes and also to traffic shaping measures, such as queuing and application prioritization.
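A continuously running process of this kind can be sketched with an exponentially weighted moving average (EWMA), used here as a simple stand-in for a learned model: each new utilization reading nudges a running estimate, and new flows are shared between two paths in proportion to their estimated spare capacity. The path names, readings, and smoothing factor alpha=0.2 are hypothetical.

```python
def ewma(previous, sample, alpha=0.2):
    """Exponentially weighted moving average: recent samples count more."""
    return alpha * sample + (1 - alpha) * previous

def split_weights(smoothed_util):
    """Share new flows between paths in proportion to their spare capacity."""
    spare = {path: max(0.0, 1.0 - u) for path, u in smoothed_util.items()}
    total = sum(spare.values())
    if total == 0:
        # Every path is saturated: fall back to an even split.
        return {path: 1 / len(spare) for path in spare}
    return {path: s / total for path, s in spare.items()}

# Hypothetical utilization readings (fraction of link capacity) for two paths.
readings = [{"A": 0.30, "B": 0.30}, {"A": 0.80, "B": 0.35}, {"A": 0.85, "B": 0.40}]
smoothed = {"A": 0.30, "B": 0.30}
for sample in readings:
    smoothed = {p: ewma(smoothed[p], sample[p]) for p in smoothed}
    print({p: round(w, 2) for p, w in split_weights(smoothed).items()})
```

As path A gets busier, its share of new flows drifts down gradually rather than flipping abruptly, which is the kind of incremental adjustment described above.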

Autonomous nodes vs coordinated traffic management

D-Link performs its dynamic AI-based traffic management on the switch itself, while Cisco runs these tasks off the switch, in separate software. The D-Link strategy is more immediate and seems to be a faster and simpler solution than the Cisco approach.

Independent decision-making by network devices works very well for routing on the Internet. Onboard AI services for traffic management could also operate efficiently if all of the devices on the network run the same protocols. So, the D-Link strategy is a good one if every switch is provided by D-Link and comes from the same AI-enhanced series.

However, onboard AI traffic management becomes less effective as a network approaches its capacity limits, because a private network has a finite capacity and each switch can only see its own links. The Cisco strategy of watching all switches from a centralized monitor offers more scope for network-wide solutions to traffic issues and is the only way to support full capacity planning.

Cisco isn’t the only hardware provider to combine traffic monitoring, traffic management, and capacity planning in one AI-supported network management suite. In fact, Juniper Networks takes all of these functions and adds on another AI-based feature, which is natural language processing.

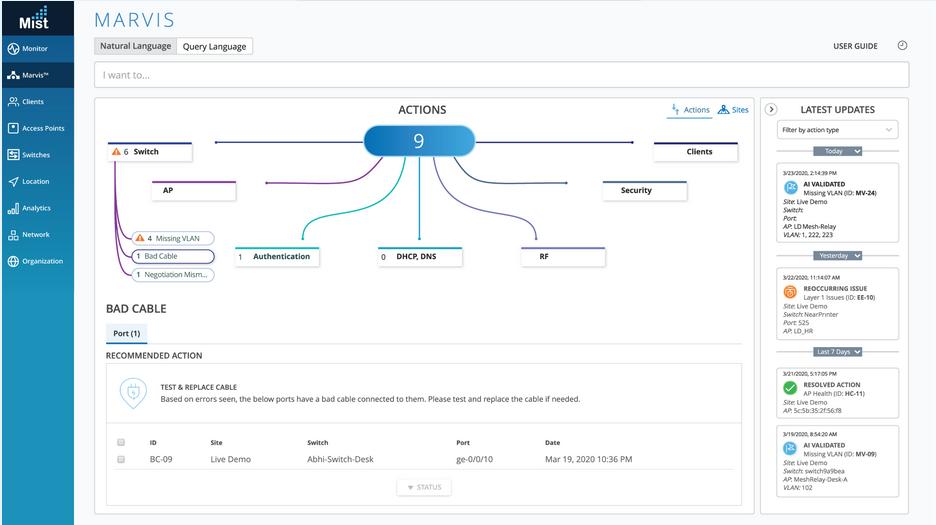

The Juniper Networks Marvis Virtual Network Assistant is a voice-activated chatbot that can carry out network monitoring and provide solutions to immediate problems. The Marvis system uses a voice command interface to access a database of solutions. This is a similar concept to Google’s Voice Search, and it uses ML to narrow down the most likely solution to the problem raised by the user.

Over time, records of previous problems experienced by the system enable the Marvis search engine to focus on related issues in subsequent searches, delivering more likely solutions. Traffic analysis and capacity planning are not considered urgent tasks. However, they are very time-consuming and require many variables to be adjusted in order to plan for every possible traffic scenario.
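The idea of favoring solutions that fit a network’s history can be sketched as a ranking function. This is not Juniper’s method: the knowledge base is hypothetical, simple word overlap stands in for natural language processing, and each past fix adds a bonus weighted by a made-up boost factor.

```python
import re
from collections import Counter

# Hypothetical knowledge base of known problems and their descriptions.
KNOWLEDGE_BASE = {
    "dhcp-exhausted": "clients cannot get ip address dhcp pool exhausted",
    "ap-channel": "wifi slow interference access point channel congestion",
    "dns-timeout": "websites not loading dns server timeout",
}

def tokens(text):
    """Lowercase word set, ignoring punctuation."""
    return set(re.findall(r"[a-z]+", text.lower()))

def rank(query, history, boost=0.5):
    """Score each entry by word overlap with the query, plus a bonus for
    entries that resolved problems on this network before."""
    q = tokens(query)
    seen = Counter(history)
    total = sum(seen.values()) or 1
    scores = {}
    for key, text in KNOWLEDGE_BASE.items():
        overlap = len(q & tokens(text)) / len(q) if q else 0.0
        scores[key] = overlap + boost * seen[key] / total
    return sorted(scores, key=scores.get, reverse=True)

print(rank("users cannot load pages", []))
print(rank("users cannot load pages", ["dns-timeout", "dns-timeout"]))
```

With no history, the DHCP entry wins on word overlap alone; after two past DNS fixes on this network, the DNS entry moves to the top for the same question.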

The Marvis system is based on the Juniper Networks Mist AI system. Mist AI also supports an analytics module that searches through recent logs to collect performance data that can identify a problem. It also activates reporting tools that are resident on switches to gain deeper insights into the root cause of performance problems.

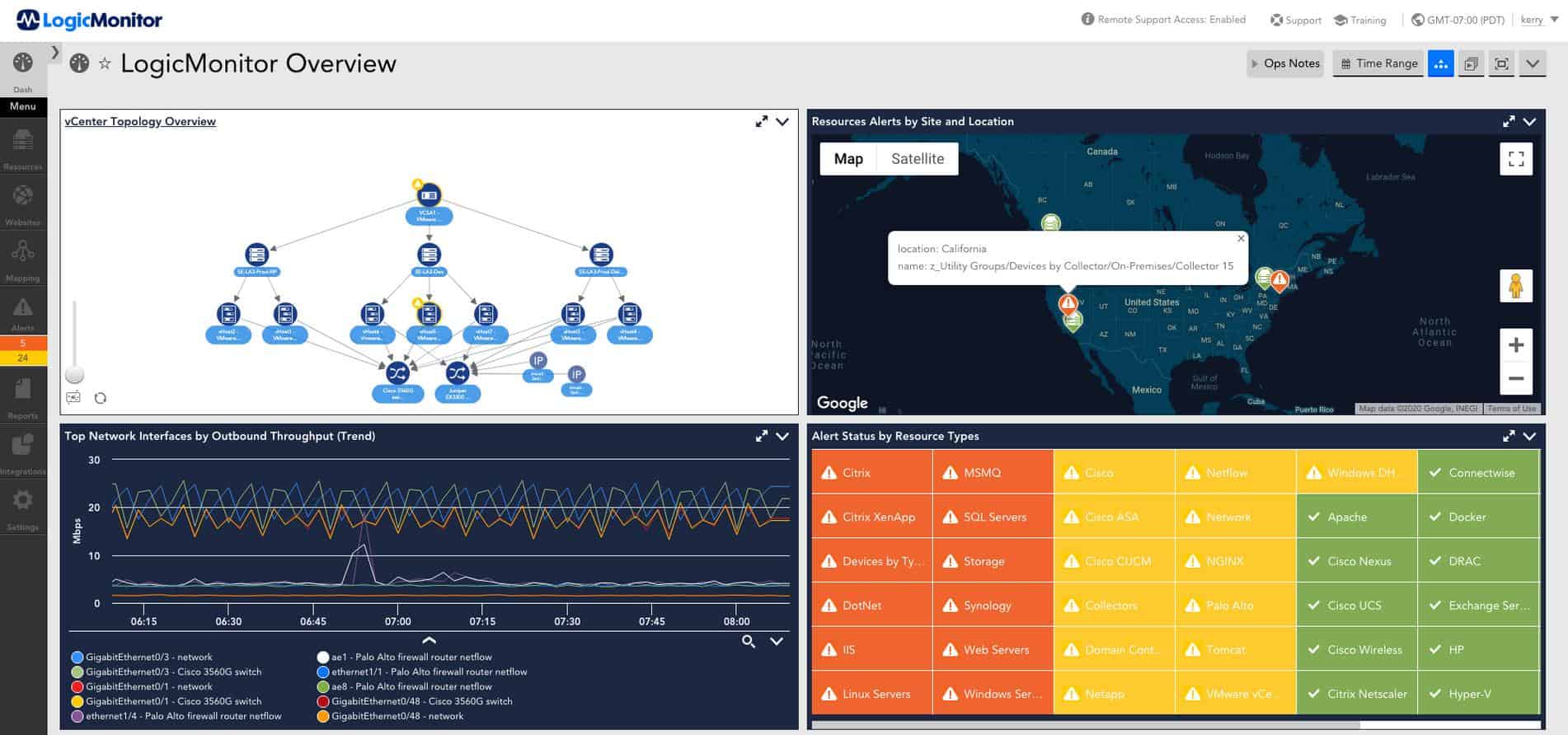

Cisco Systems and Juniper Networks don’t have exclusive access to the reporting functions built into their network devices. Third-party network monitoring tools also take advantage of these sources of information. LogicMonitor is a cloud-based network monitoring system that combines network performance monitoring, which is SNMP-based, with traffic analysis, which works on packet sampling. As with the Cisco Systems and Juniper Networks systems, LogicMonitor uses AI for root cause analysis.

The LogicMonitor system uses ML processes to adjust performance alert thresholds over time and reduce the number of alerts generated by the system to provide a more accurate grading of events.
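Adaptive thresholds can be sketched by comparing each new reading with a high percentile of the metric’s own recent history, rather than with a fixed number set by hand. This is an assumption for illustration, not LogicMonitor’s algorithm; the window size, 99th percentile, and minimum sample count are hypothetical defaults. It relies on the standard library’s statistics.quantiles function (Python 3.8 or later).

```python
import statistics
from collections import deque

class DynamicThreshold:
    """Alert only when a metric exceeds a high percentile of its own recent history."""

    def __init__(self, window=288, percentile=99, min_samples=30):
        self.history = deque(maxlen=window)  # oldest readings drop off automatically
        self.percentile = percentile         # must be between 1 and 99
        self.min_samples = min_samples       # stay quiet until a baseline exists

    def check(self, value):
        """Return True if value should raise an alert, then learn from it."""
        alert = False
        if len(self.history) >= self.min_samples:
            cutoffs = statistics.quantiles(self.history, n=100)
            alert = value > cutoffs[self.percentile - 1]
        self.history.append(value)
        return alert

# Hypothetical CPU readings: steady between 60% and 64%, then one spike.
monitor = DynamicThreshold(window=100, min_samples=30)
readings = [60 + (i % 5) for i in range(50)] + [95]
alerts = [monitor.check(r) for r in readings]
print(alerts.count(True))  # 1
```

Because the threshold follows the metric’s normal range, routine fluctuation raises no alerts, while the spike still does.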

Capacity planning

Capacity planning requires many more inputs than immediate device health monitoring, and it is another area of network management that benefits greatly from AI and ML processes. Historical data can be studied with less urgency to project traffic growth trends. However, unexpected equipment failure requires rapid re-routing and could require extensive traffic management measures to be implemented immediately.
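Projecting a growth trend from historical data can be as simple as fitting a straight line. The sketch below uses ordinary least squares as a stand-in for an ML trend model and assumes six months of hypothetical peak-load figures for a 1 Gbit/s uplink. With growth of 29 Mbit/s per month, the trend line reaches capacity about 15.7 months after the last measurement.

```python
def linear_fit(xs, ys):
    """Ordinary least-squares line y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def months_until_full(monthly_peak_mbps, capacity_mbps):
    """Project how many months after the latest sample the trend hits capacity."""
    months = list(range(len(monthly_peak_mbps)))
    slope, intercept = linear_fit(months, monthly_peak_mbps)
    if slope <= 0:
        return None  # no growth trend, so no projected exhaustion date
    return (capacity_mbps - intercept) / slope - months[-1]

# Hypothetical monthly peak load (Mbit/s) on a 1 Gbit/s uplink.
peaks = [400, 430, 455, 490, 515, 545]
print(round(months_until_full(peaks, 1000), 1))  # 15.7
```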

The complexity of long-term capacity planning and the speed needed for immediate failure-driven re-planning put pressure on network staff and call for automated analysis tools. AI and ML speed up capacity planning and traffic-shaping tasks, and these functions are built into the AI products of Cisco Systems and Juniper Networks.

Capacity planning requires more than an estimate of overall traffic on a network. The load on each switch on the network needs to be considered, because expanding capacity in one area of a network can increase pressure on switches elsewhere. Therefore, capacity planning requires traffic flow simulations and a recursive process of switch-by-switch performance estimates. AI-based capacity planning tools can perform this process almost instantly.
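A switch-by-switch flow simulation can be sketched by routing each traffic demand along its shortest path and adding up the load on every link. The topology, demands, and 1,000 Mbit/s per-direction capacities below are hypothetical, and routing by fewest hops is a simplifying assumption. The example shows growth at one access switch that fits its own uplink but overloads the core link to the server switch.

```python
from collections import defaultdict, deque

def shortest_path(graph, src, dst):
    """Breadth-first search for a fewest-hops path (assumes dst is reachable)."""
    prev = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            break
        for nxt in graph[node]:
            if nxt not in prev:
                prev[nxt] = node
                queue.append(nxt)
    path, node = [], dst
    while node is not None:
        path.append(node)
        node = prev[node]
    return path[::-1]

def link_loads(graph, demands):
    """Route each demand on its shortest path and sum the load per directed link."""
    loads = defaultdict(float)
    for (src, dst), mbps in demands.items():
        path = shortest_path(graph, src, dst)
        for hop in zip(path, path[1:]):
            loads[hop] += mbps
    return loads

# Hypothetical topology: two access switches and a server switch on one core.
graph = {"core": ["acc1", "acc2", "srv"],
         "acc1": ["core"], "acc2": ["core"], "srv": ["core"]}
capacity = defaultdict(lambda: 1000.0)  # Mbit/s per direction (full duplex)

def overloaded(loads):
    """Links whose simulated load exceeds their capacity."""
    return sorted(link for link, load in loads.items() if load > capacity[link])

demands = {("acc1", "srv"): 600, ("acc2", "srv"): 300}
print(overloaded(link_loads(graph, demands)))  # []

demands[("acc2", "srv")] = 500  # growth at acc2 fits its own 1,000 Mbit/s uplink
print(overloaded(link_loads(graph, demands)))  # [('core', 'srv')]
```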

Security monitoring

AI’s triage approach to examining data and ML’s experience-based performance expectations are both very important in new methods of security monitoring. Two forms of security system can be greatly enhanced by AI and ML methods: Security Information and Event Management (SIEM) and User and Entity Behavior Analytics (UEBA).

SIEM tools

SIEM tools are made up of two modules that give the software category its name. These are SIM, which is Security Information Management, and SEM, which is Security Event Management. Security Event Management relies on network traffic analysis. It is the Security Information Management part of SIEM that really benefits from AI systems.

SIM involves examining log files for signs of malicious activity. The SIEM gathers all available log messages from every piece of equipment and software package operating for the business. These messages are “consolidated,” which means that they are converted into a common format so that they can be searched as a single pool of data. This requires heavy processing, and AI methods cut down the time it takes to identify malicious activity.
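Consolidation can be sketched as parsing each source’s format into the same record fields. The two log formats and regular expressions below are hypothetical examples; a real SIEM ships parsers for many formats and also normalizes timestamps, which this sketch skips. The IP address comes from the 203.0.113.0/24 documentation range.

```python
import re

# Two hypothetical log formats from different sources (illustrative only).
SSH_PATTERN = re.compile(
    r"(?P<ts>\S+) (?P<host>\S+) sshd\[\d+\]: Failed password for (?P<user>\S+) from (?P<ip>\S+)"
)
APP_PATTERN = re.compile(r"(?P<ts>[^,]+),LOGIN_FAIL,(?P<user>[^,]+),(?P<ip>[^,]+)")

def normalize(line, source):
    """Convert one raw log line into a common record, or None if unrecognized."""
    if source == "sshd":
        m = SSH_PATTERN.search(line)
    elif source == "webapp":
        m = APP_PATTERN.match(line)
    else:
        return None
    if not m:
        return None
    return {"time": m["ts"], "event": "login_failure", "user": m["user"],
            "src_ip": m["ip"], "source": source}

raw = [
    ("sshd", "2024-05-01T10:00:01 gw1 sshd[812]: Failed password for root from 203.0.113.7 port 4242 ssh2"),
    ("webapp", "2024-05-01T10:00:03,LOGIN_FAIL,admin,203.0.113.7"),
]
records = [normalize(line, src) for src, line in raw]
for record in records:
    print(record)
```

Once both lines share the same fields, a single query on src_ip finds the same address attacking two different systems.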

In security monitoring, speed is of the essence because there is no point in identifying a hacker once the damage has already been done. Therefore, the AI strategy of identifying patterns of activity enables large amounts of data to be searched in enough time to catch and block the malicious activity before too much damage is done.
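A simple pattern of the kind a SIEM watches for is a burst of failed logins from one address. The sketch below uses a fixed sliding-window rule as a stand-in; AI-driven SIEMs learn richer patterns, and the limit of five failures per minute is a hypothetical setting.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta

def detect_bruteforce(events, max_failures=5, window=timedelta(minutes=1)):
    """Flag source IPs with more than max_failures login failures inside window.

    events: iterable of (timestamp, src_ip) pairs sorted by timestamp.
    Returns a dict mapping each flagged IP to the time it was first flagged.
    """
    recent = defaultdict(deque)
    flagged = {}
    for ts, ip in events:
        q = recent[ip]
        q.append(ts)
        while ts - q[0] > window:  # forget failures older than the window
            q.popleft()
        if len(q) > max_failures and ip not in flagged:
            flagged[ip] = ts
    return flagged

start = datetime(2024, 5, 1, 10, 0, 0)
# One address fails every 5 seconds; another fails only every 10 minutes.
events = [(start + timedelta(seconds=5 * i), "203.0.113.7") for i in range(8)]
events += [(start + timedelta(minutes=10 * i), "198.51.100.20") for i in range(8)]
events.sort()
print(detect_bruteforce(events))
```

Only the fast-failing address is flagged, at the moment of its sixth failure, early enough for an automated block to stop the attack in progress.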

AI-driven SIEM systems are quick enough to act as network performance monitors as well. After all, the SNMP reports that feed into network monitors are just a form of log file. Dynatrace is an example of a monitoring system that can use the same AI methods to operate as both a performance monitor and a SIEM system.

User and Entity Behavior Analytics

UEBA is a method of tracking activity on a per-user and per-endpoint (entity) basis. The UEBA system logs each system access event and usage of files and resources for each user account and each IP address. The entity tracking can also extend to logging the actions performed by source IP addresses outside the network.

These records feed into a machine-learning routine, which can spot sudden changes in behavior and raise a flag on that account or IP address. This is the beginning of a triage process, so full surveillance measures are not needed for every user. Detailed tracking only starts once a UEBA signal points to a specific account.
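A per-entity baseline can be sketched with a mean and standard deviation for each user, flagging days that fall more than three standard deviations from that user’s own norm. The activity counts and the three-sigma limit are hypothetical, and a z-score is only one simple stand-in for the machine-learning routine. Note that 400 file accesses is flagged for the light user but not for the heavy one, which is the point of baselining each entity separately.

```python
import statistics

def build_baselines(history):
    """history maps each user to a list of past daily counts (e.g. files accessed)."""
    return {user: (statistics.mean(c), statistics.pstdev(c)) for user, c in history.items()}

def flag_anomalies(baselines, today, z_limit=3.0):
    """Return users whose activity today deviates sharply from their own baseline."""
    flagged = []
    for user, count in today.items():
        mean, std = baselines[user]
        if std:
            z = (count - mean) / std
        else:
            # A perfectly constant history: any change at all is unusual.
            z = 0.0 if count == mean else float("inf")
        if abs(z) > z_limit:
            flagged.append(user)
    return flagged

# Hypothetical daily file-access counts over one working week.
history = {
    "alice": [40, 42, 38, 41, 39],       # light, steady user
    "bob":   [400, 420, 380, 410, 390],  # heavy, but normal for his role
}
baselines = build_baselines(history)
print(flag_anomalies(baselines, {"alice": 400, "bob": 405}))  # ['alice']
```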

The UEBA strategy has become a standard element of many cybersecurity tools but it is particularly widely used in Intrusion Detection Systems (IDS) and Next-generation Anti-virus Systems (NGAV).

Intrusion Detection Systems

UEBA became very important for Intrusion Prevention Systems (IPSs), which are IDSs with automated mitigation actions attached to them. Early intrusion prevention systems would look for patterns of activity and then automatically shut down a user’s access if activity deviated from the norm. However, these systems were over-cautious and ended up blocking staff from doing their jobs and banning large sections of the population from accessing a website.

The people who wrote the rules that defined “normal activity” hadn’t sampled the everyday activity of every network in every business in the world. Therefore, activity deemed suspicious in the businesses studied by the software designers could be acceptable practice in other businesses. This situation is known as “false-positive reporting,” and it reduced the attractiveness of IPSs.

Rather than defining “normal activity” in the software package, IPS designers included UEBA routines that establish a baseline of normal activity on each network. UEBA greatly reduces the incidence of false-positive reporting. This innovation has improved the uptake of IPSs and attracted more development in this field.

Next-generation anti-virus systems

The term “next-generation,” when applied to products such as firewalls and anti-virus systems, indicates that the product deploys UEBA for baselining. The AV industry almost died out because its strategy of providing a list of malware to look out for was overwhelmed by the high volume of new viruses being created.

A major problem with traditional AV systems was that at least one customer of the security package had to be hit by a virus before the provider knew that the virus existed. It takes time to work out what a virus does and what to call it, and during that research period, many other customers of the AV system get infected.

No one wants the risk of being the first customer to catch a virus that their AV system can’t block. So, the entire endpoint protection industry was under threat because those services were not good enough. Adopting UEBA solved that problem.

With UEBA, AV services can spot a virus the first time it gets onto a protected system. This is because the “entity” part of User and Entity Behavior Analytics looks at all of the processes running on an endpoint and spots unusual activity.
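The contrast between list-based and behavior-based detection can be sketched in a few lines. Everything here is hypothetical: the signature list holds a single made-up hash, and the behavior check compares a process’s file-modification rate with an assumed baseline for the endpoint, using an arbitrary factor of ten.

```python
import hashlib

# Hypothetical signature list: SHA-256 hashes of files already known to be malicious.
KNOWN_BAD_HASHES = {hashlib.sha256(b"old-virus-sample").hexdigest()}

def signature_check(file_bytes):
    """Traditional AV: detects only files whose hash is already on the list."""
    return hashlib.sha256(file_bytes).hexdigest() in KNOWN_BAD_HASHES

def behavior_check(files_modified_per_min, baseline_per_min, factor=10):
    """Behavior-based check: flag a process that modifies files far faster
    than processes on this endpoint normally do (assumed baseline)."""
    return files_modified_per_min > factor * baseline_per_min

new_sample = b"brand-new-ransomware"  # never seen before, so not on the list
print(signature_check(new_sample))  # False: the hash list cannot know it yet
print(behavior_check(files_modified_per_min=900, baseline_per_min=12))  # True
```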

AI for network management

AI is very well suited to some of the activities that network monitoring tools need to perform. When choosing a tool, however, customers should prioritize how competently it manages the network rather than whether it uses AI. Over time, more and more of the best services in each category of network monitoring systems will turn out to have AI and ML processes working in the background, contributing to their success.

AI & ML in network management FAQs

What is AI and ML in networking?

Machine Learning (ML) is a discipline within Artificial Intelligence (AI). It tracks activity and records standard behavior, which is a key mechanism in User and Entity Behavior Analytics (UEBA). UEBA can be used to look out for anomalous behavior in security monitoring, and it can also be used to create trend analysis models for network capacity planning.

How is AI used in networking?

AI is particularly useful in the creation of virtual networks, such as software-defined networks, because it can identify potential bottlenecks and reroute traffic around them before network performance is damaged. Without AI, network managers usually only get notifications of traffic buildup once the system has already started to fail.

How could AI ML help in integrated network planning?

Machine Learning (ML) is a technique within Artificial Intelligence (AI) that can track activity, identifying each endpoint and protocol by its traffic volume. When planning a network, an engineer can examine current patterns and simulate new types of traffic before adding a service. It is also possible to generate trend analysis with ML to identify how demand will grow naturally, even without adding extra services or endpoints.